Latency and bandwidth are two characteristics that can be measured in the traffic flowing across computer networks such as the internet.

One of the most misunderstood topics in networking is the difference between speed and capacity. Many people believe that speed and capacity are the same thing. When you hear someone say “my internet speed is 300 Mbps” or something similar, what they are really referring to is the bandwidth capacity of the internet service, not the speed. The speed of a network is actually the result of both bandwidth and latency.
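A related source of confusion is the unit itself: providers quote bandwidth in megabits per second (Mbps), while file sizes are usually shown in megabytes. The short sketch below converts one into the other, assuming 8 bits per byte and ignoring protocol overhead; the function name is only illustrative.

```python
def mbps_to_megabytes_per_second(mbps: float) -> float:
    """Convert megabits per second to megabytes per second (8 bits = 1 byte)."""
    return mbps / 8


# A "300 Mbps" plan can move at most 37.5 megabytes per second.
print(mbps_to_megabytes_per_second(300))  # 37.5
```

This is the theoretical ceiling of the link; the rate you see in practice is normally lower.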

You have surely experienced a slow internet connection, for example when your children are playing games or watching Netflix, Disney+, or Amazon Prime in another room, or attending remote classes on Skype, Teams, Zoom, or Google Meet. This happens because only a certain amount of data (the capacity) fits into the communication pathway (the pipe or tube) at one time.

This pathway is like a road where the vehicles are the data: the more congested it is, the slower the traffic. The same is true of your data channel: the more congested it is, the greater the delay when surfing the internet.

Read also

- What are network protocols: Network protocols - what it is

- The main internet protocol: HTTP Protocol

What is Network Bandwidth?

The bandwidth of a network refers to the width of the data channel, that is, its capacity, not the speed at which data is transferred. The delay in delivering the data is called latency. Bandwidth and latency therefore work together to determine the speed you experience.

The wider the pipe or data duct, the less time it takes to load web pages and transfer files. This is why your internet starts to slow down when many devices are using it, especially if the volume of data being transferred (video streaming, for example) is very high.

What is latency?

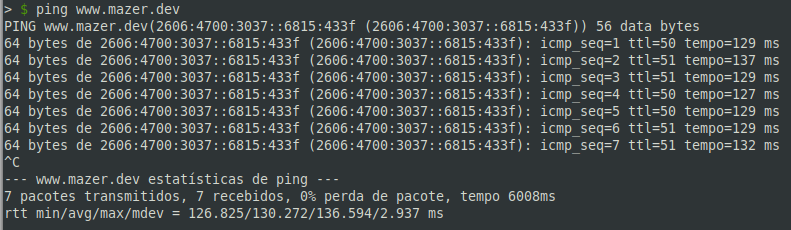

Latency is the amount of time a data packet takes to travel from point A to point B. Together, bandwidth and latency define the speed and capacity of a network. Latency is usually expressed in milliseconds and can be measured using your computer's ping command.

When you run a ping command, a small data packet (32 bytes by default on Windows, 56 bytes on Linux and macOS) is sent to another machine, and the round-trip time is measured in milliseconds. The ping command measures how long it takes the data packet to leave your computer, travel to the destination computer, and return to the origin computer.

Try it yourself: open your computer's terminal and enter the following command:
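The same round-trip idea can be approximated in code. The sketch below does not send ICMP packets like ping does (that normally requires elevated privileges); instead it times how long it takes to open a TCP connection, which costs roughly one round trip. The function name, the default port 443, and the timeout are assumptions made for illustration.

```python
import socket
import time


def tcp_connect_time_ms(host: str, port: int = 443, timeout: float = 3.0) -> float:
    """Return the time, in milliseconds, needed to open a TCP connection.

    When `host` is a name rather than an IP address, the measurement
    also includes the DNS lookup, so it is an upper bound on the
    network round-trip time.
    """
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000


if __name__ == "__main__":
    try:
        print(f"{tcp_connect_time_ms('www.mazer.dev'):.1f} ms")
    except OSError as exc:
        # Covers timeouts, DNS failures, and refused connections.
        print(f"connection failed: {exc}")
```

The value will differ from what ping reports, but it tends to rise and fall with it, which is enough to compare two networks or two moments of the day.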

ping www.mazer.dev

The result should be similar to the following image, where the return time is latency:

Bandwidth is expressed in bits per second and refers to the amount of data that can be transferred in one second. The wider the pipe, the more bits can be transferred per second. And if your bandwidth is congested, your latency (delay) increases.

Think of it as a crowded highway. The more vehicles there are on the highway, the more congested the traffic will be. As a result, everyone is forced to drive more slowly.
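To see how bandwidth and latency combine, here is a deliberately simplified estimate: total time is the latency plus the time needed to push all the bits through the pipe. It ignores connection setup, protocol overhead, and congestion, and the sizes, the 300 Mbps link, and the 50 ms latency are illustrative values only.

```python
def transfer_time_seconds(size_bytes: int, bandwidth_mbps: float, latency_ms: float) -> float:
    """Estimate transfer time as latency plus transmission time."""
    transmission = (size_bytes * 8) / (bandwidth_mbps * 1_000_000)
    return latency_ms / 1000 + transmission


# Small request (10 KB): the time is almost entirely latency.
print(f"{transfer_time_seconds(10_000, 300, 50):.3f} s")       # 0.050 s

# Large file (100 MB): the time is almost entirely bandwidth.
print(f"{transfer_time_seconds(100_000_000, 300, 50):.3f} s")  # 2.717 s
```

This is why a page made of many small requests can feel slow on a high-bandwidth connection with high latency, while a large download depends mostly on bandwidth.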

Streaming vs. Downloading

Streaming and downloading are essentially the same thing as far as bandwidth is concerned. The only difference between them is this:

When downloading, you cannot watch a video until the whole file has finished downloading.

Streaming, by contrast, lets you watch the video while the file is still being downloaded.

YouTube, Vimeo, and Twitch are services that use streaming. While watching a video, you can see the progress bar advancing in the video player. Once the progress bar is full, the video is fully downloaded and you can jump backward or forward to any point in it.
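The difference in waiting time can be put into numbers. In the sketch below, a download has to fetch the whole file before playback, while a stream only has to fill an initial buffer. The 500 MB video, the 5 MB buffer, and the 50 Mbps connection are hypothetical values, and real players choose their buffer sizes differently.

```python
def wait_before_playback_seconds(megabytes_needed: float, bandwidth_mbps: float) -> float:
    """Time to fetch the data required before playback can start."""
    return megabytes_needed * 8 / bandwidth_mbps


VIDEO_MB = 500       # hypothetical file size
BUFFER_MB = 5        # hypothetical initial streaming buffer
BANDWIDTH_MBPS = 50  # hypothetical connection

print(wait_before_playback_seconds(VIDEO_MB, BANDWIDTH_MBPS))   # 80.0 (download)
print(wait_before_playback_seconds(BUFFER_MB, BANDWIDTH_MBPS))  # 0.8 (streaming)
```

In both cases the same amount of data crosses the network in the end; streaming only changes when you can start watching.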

This article explained bandwidth and network latency in a simplified way. If you need more information, leave a comment here, on the channel video, or on my Twitter profile.
